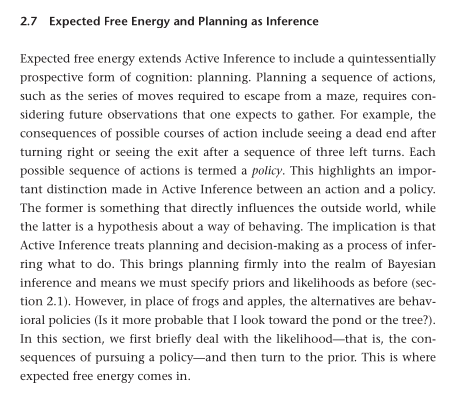

Free energy principle, Active inference

Questions for modeling a currency

- What are the roles envisaged?

- What is the ideal participant?

- What can we say about them?

- What other vantage points are relevant? (Miners, the system as a whole...)

- What is the ideal participant?

- What is observed and what is inferred?

- What is exploration and what is exploitation?

- What are the action steps that comprise a policy?

- What is the decision point where policies are compared?

Steph's answers

- Roles: Economic agent, miner, Arbitrager (between Quai and Qi), AI cost function

- Ideal participant: Economic agent. One who needs to decide a policy and actions while valuing their tradeoffs and opportunity cost

- Observed: The price of Qi as defined by arbitragers (the market)

- Inferred: The policy of best action

- Exploration: seeking the best ROI based on policy

- Exploitation: knowing when the price of Qi is improperly valued by the market of arbitragers

- Action steps comprising policy: Modeling potential policies in tradeoff landscape, depth of following a particular policy accurately, electing a policy and having patience to observe it play out over years.

- Decision point: When your model has an expectation of a perverse outcome and that outcome is realized. "Expecting to see a black swan and then seeing it"

Problems with Active Inference approach

- Active Inference focuses on {$P$}, thus can't consider {$Q$} separately.

- Active Inference focuses on invoking Bayes's theorem to calculate and update beliefs {$P$}

- For expected inference energy, it compares {$Q$} and {$s_\tau$} with {$P$} and {$o_\tau$}, rather than distilling values {$R$} and {$r_\tau$}.

- There could be {$100,000,000,000$} neurons expressing what as {$P$}, and {$100,000$} cortical columns expressing how as {$Q$}, and roughly {$10$} values expressing why as {$R$}. (Indeed, these could be {$8$} mental contexts.

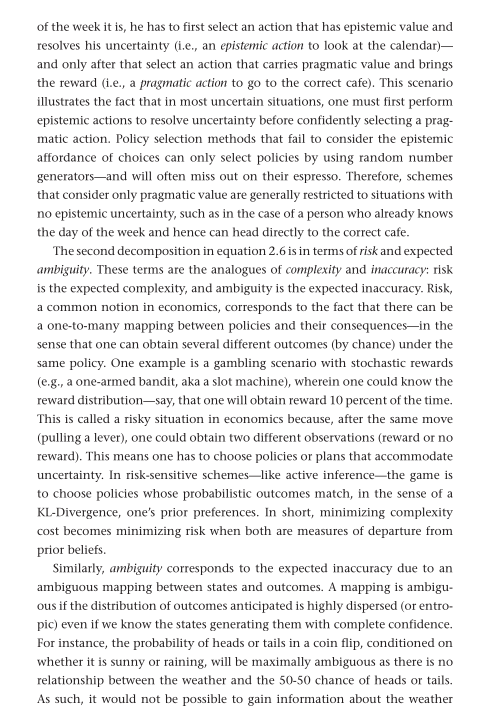

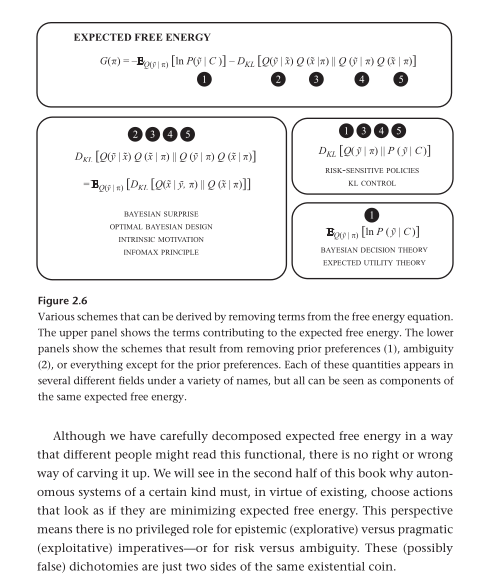

Expected Free Energy (of a policy)

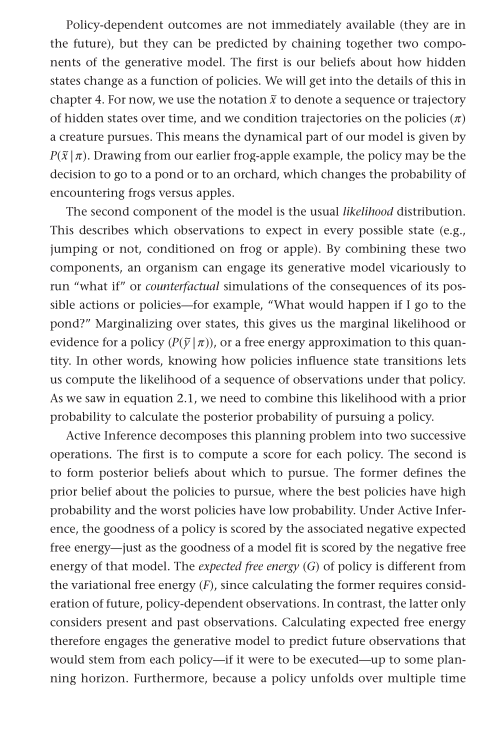

Policy {$\pi$} is a sequence of actions, whereas {$\tilde{x},\tilde{y}$} are sequences of observations. This brings to mind the hippocampus, or entorhinal cortex, of a rat, as it traverses a path, and has or foresees sensations along the way.

- Action: direct influence upon the outside world

- Policy {$\pi$}: an imagined possible sequence of actions, thus the formulation of a hypothesis about a way of behaving (and its consequences)

- Planning and decision-making: a process of inferring what to do

Counterfactual (what if) simulations depend on:

- our beliefs about how hidden states change as a function of policies (There is a sequence or trajectory {$\tilde{x}$} of hidden states over time.) (How will the world change based on what we do.)

- we are interested in the dynamical part of our model: the marginal likelihood or evidence for a policy {$P(\tilde{x} | \pi)$} (What we expect to see based on what we do.)

- likelihood distribution: which observations to expect in every possible state

Combine

- likelihood: consequences of pursuing a policy

- prior probability:

to calculate

- posterior probability of pursuing a policy

- compose a score for each policy: define the prior beliefs about policies, where best policies have high probability (score with the negative expected free energy)(this is the logarithm of a probability, which is comparable to how potential energy is written)

- form posterior beliefs about which policy to pursue (exponentiate the logarithm to get the probability distribution, the belief, and normalize it, so that {$\sum_{\tilde{x}} P(\tilde{x}|\pi)=1$} )

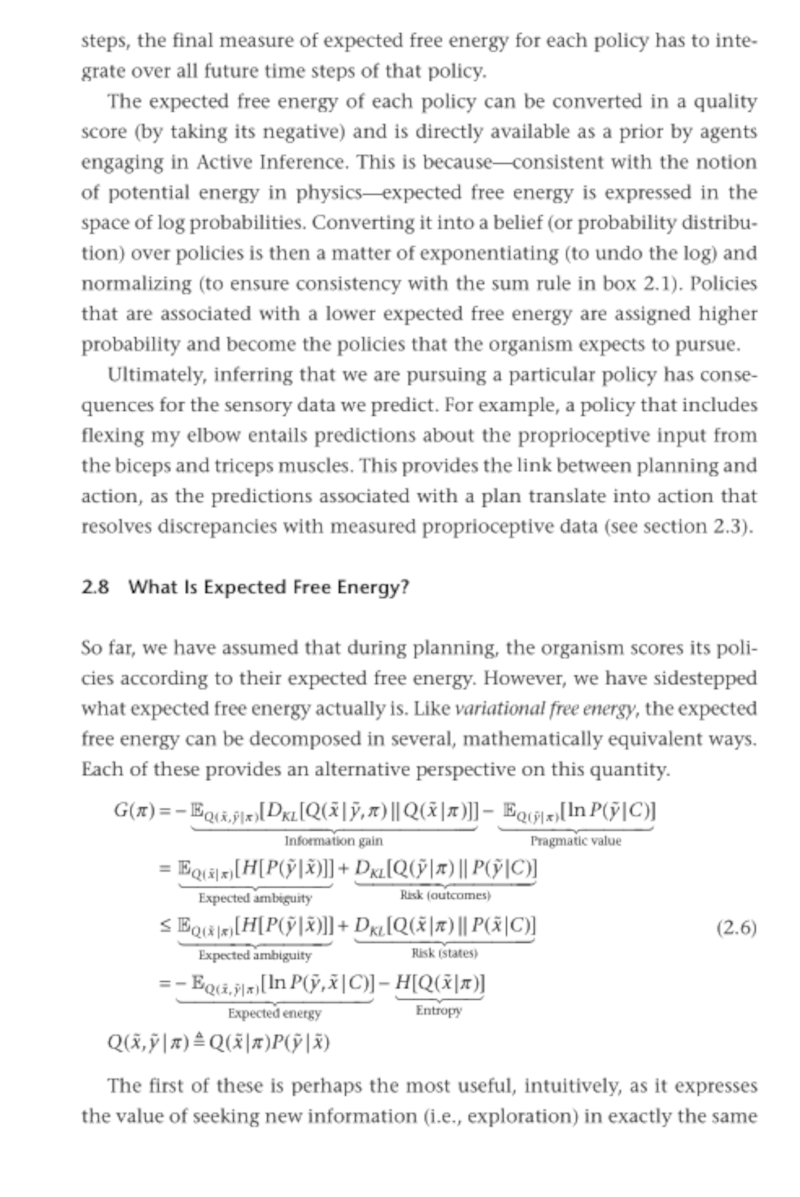

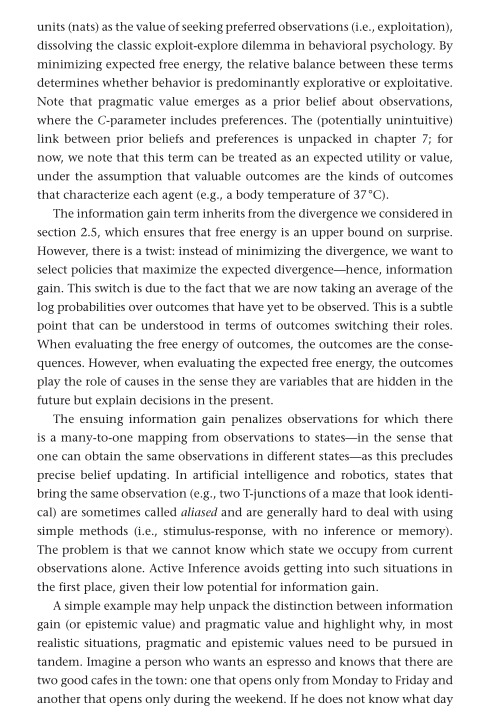

expected free energy of a policy

- exploration: the extent to which the policy is expected to resolve uncertainty

- exploitation: how consistent the predicted outcomes are with an agent’s goals

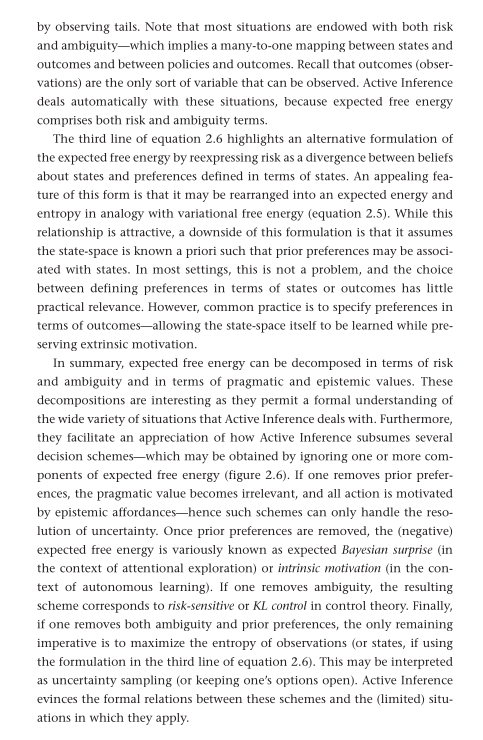

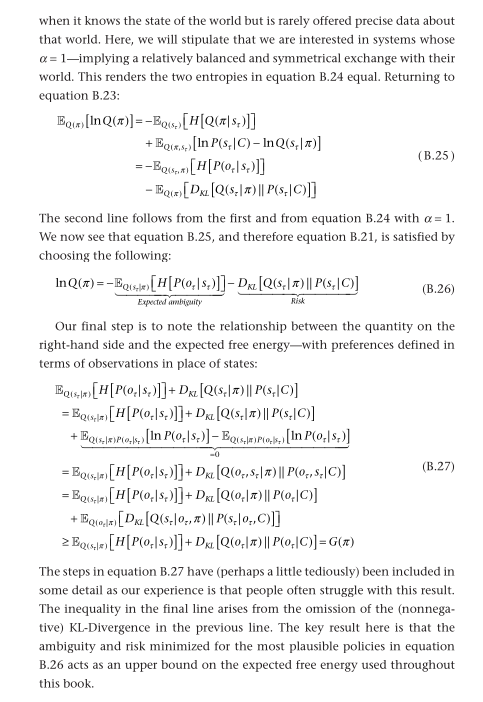

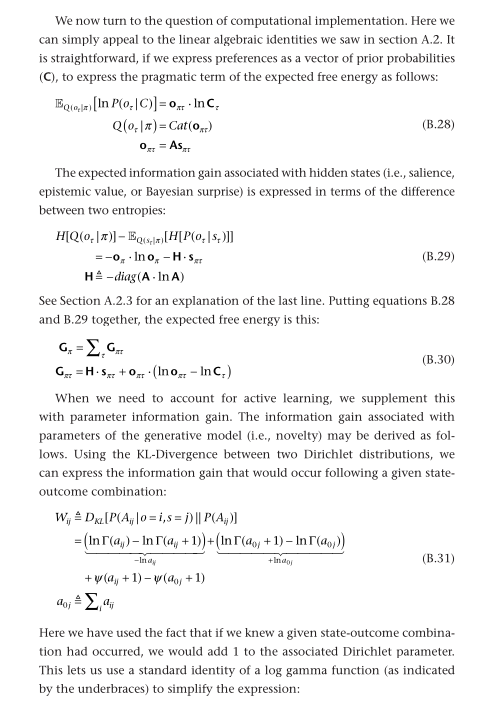

Equation 2.6 in the textbook

|  |  | |

|  |  |  |

| {$G(\pi) = E_{Q(s_\tau | \pi)}[H[P(o_\tau |s_\tau)]] + D_{KL}[Q(o_\tau|\pi)\parallel P(o_\tau |C)]$} | from Appendix B where {$s_\tau =\tilde{x}$} and {$o_\tau = \tilde{y}$} |

| {$G(\pi)= -E_{Q(\tilde{x},\tilde{y}|\pi)}[D_{KL}[Q(\tilde{x}|\tilde{y},\pi)\parallel Q(\tilde{x}|\pi)]] - E_{Q(\tilde{y}|\pi)}[\ln P(\tilde{y}|C)]$} | minus information gain (exploration) minus pragmatic value (exploitation) |

| {$G(\pi) = E_{Q(\tilde{x}|\pi)}[H[P(\tilde{y}|\tilde{x})]] + D_{KL}[Q(\tilde{y}|\pi)\parallel P(\tilde{y}|C)]$} | plus expected ambiguity plus risk (outcomes) |

| {$G(\pi)\leq E_{Q(\tilde{x}|\pi)}[H[P(\tilde{y}|\tilde{x})]] + D_{KL}[Q(\tilde{x}|\pi)\parallel P(\tilde{x}|C)]$} | plus expected ambiguity plus risk (states) |

| {$G(\pi)\leq -E_{Q(\tilde{x},\tilde{y}|\pi)}[\ln P(\tilde{y},\tilde{x}|C)] -H[Q(\tilde{x}|\pi)]$} | expected energy minus entropy |

where {$Q(\tilde{x},\tilde{y}|\pi)\equiv Q(\tilde{x}|\pi)P(\tilde{y}|\tilde{x})$}

| {$G(\pi)=E_{Q(\tilde{y}|\pi)}[\ln\frac{1}{P(\tilde{y}|C)}] - D_{KL}[Q(\tilde{y}|\tilde{x})Q(\tilde{x}|\pi)\parallel Q(\tilde{y}|\pi)Q(\tilde{x}|\pi)]$} | expected utility and intrinsic motivation |

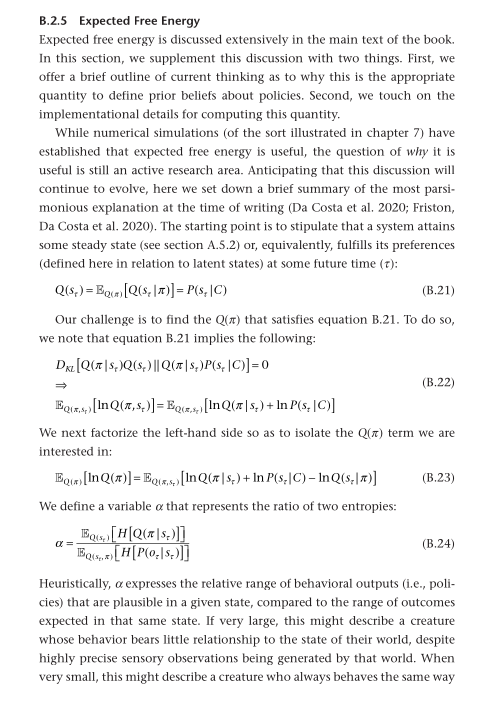

Appendix B in the textbook

|  |  |

{$Q(s_\tau )=E_{Q(x)}[Q(s_\tau | \pi)]=P(s_\tau | C)$}

{$D_{KL}[Q(\pi | s_\tau)Q(s_\tau) \parallel Q(\pi |s_\tau)P(s_\tau |C)] = \sum_\pi Q(\pi | s_\tau)Q(s_\tau) \ln \frac{Q(\pi | s_\tau)Q(s_\tau)}{Q(\pi |s_\tau)P(s_\tau |C)} = \sum_\pi Q(\pi, s_\tau) \ln \frac{Q(\pi, s_\tau)}{Q(\pi |s_\tau)P(s_\tau |C)} = E_{Q(\pi, s_\tau)} [ \ln Q(\pi, s_\tau) - \ln Q(\pi |s_\tau) -\ln P(s_\tau |C) ]$}

{$D_{KL}[Q(\pi | s_\tau)Q(s_\tau) \parallel Q(\pi |s_\tau)P(s_\tau |C)]=0 \Rightarrow E_{Q(\pi,s_\tau)}[\ln Q(\pi,s_\tau)]=E_{Q(\pi,s_\tau)}[\ln Q(\pi | s_\tau)+\ln P(s_\tau | C)]$}

{$E_{Q(\pi)}[\ln Q(\pi)]=E_{Q(\pi,s_\tau )}[\ln Q(\pi | s_\tau) + \ln P(s_\tau | C) - \ln Q(s_\tau | \pi)]$}

{$\alpha=\frac{E_{Q(s_\tau)}[H[Q(\pi | s_\tau)]]}{E_{Q(s_\tau,\pi)}[H[P(o_\tau | s_\tau)]]}$}

Assume {$\alpha=1$}, aligning {$P$} and {$Q$}, so that {$Q(s_\tau)H[Q(\pi | s_\tau)]=Q(s_\tau,\pi)H[P(o_\tau | s_\tau)]$} for all {$\tau$}, thus {$Q(s_\tau,\pi)=P(s_\tau,o_\tau,\pi)$} for all {$\tau$}.

{$E_{Q(\pi)}[\ln Q(\pi)]=-E_{Q(s_t)}[H[Q(\pi |s_\tau)]] + E_{Q(\pi,s_t)}[\ln P(s_\tau |C)-\ln Q(s_\tau |\pi)]$}

{$E_{Q(\pi)}[\ln Q(\pi)]=-E_{Q(s_t,\pi)}[H[P(o_\tau | s_\tau)]] - E_{Q(\pi)}[D_{KL}[Q(s_\tau |\pi)\parallel P(s_\tau |C)]]$}

satisfied by...

{$\ln Q(\pi)=-E_{Q(s_\tau |\pi)}[H[P(o_\tau | s_\tau)]]-D_{KL}[Q(s_\tau |\pi)\parallel P(s_\tau |C)]$}

continuing...

{$E_{Q(s_\tau |\pi)}[H[P(o_\tau |s_\tau)]]+D_{KL}[Q(s_\tau |\pi)\parallel P(s_\tau |C)] = E_{Q(s_\tau |\pi)}[H[P(o_\tau |s_\tau)]]+D_{KL}[Q(s_\tau |\pi)\parallel P(s_\tau |C)] $}

{$E_{Q(s_\tau |\pi)}[H[P(o_\tau |s_\tau)]]+D_{KL}[Q(s_\tau |\pi)\parallel P(s_\tau |C)] = E_{Q(s_\tau |\pi)}[H[P(o_\tau |s_\tau)]]+D_{KL}[Q(s_\tau |\pi)\parallel P(s_\tau |C)] + E_{Q(s_\tau |\pi)P(o_\tau |s_\tau)}[\ln P(o_\tau |s_\tau)] - E_{Q(s_\tau |\pi)P(o_\tau |s_\tau)}[\ln P(o_\tau |s_\tau)]$}

{$=E_{Q(s_\tau |\pi)}[H[ P(o_\tau |s_\tau)]] + D_{KL}[Q(o_\tau, s_\tau |\pi)\parallel P(o_\tau, s_\tau |C)]$}

{$=E_{Q(s_\tau |\pi)}[H[ P(o_\tau |s_\tau)]] + D_{KL}[Q(o_\tau |\pi)\parallel P(o_\tau |C)] + E_{Q(o_\tau |\pi)}[D_{KL}[Q(s_\tau |o_\tau,\pi) \parallel P(o_\tau, s_\tau | C)]]$}

{$\geq E_{Q(s_\tau | \pi)}[H[P(o_\tau |s_\tau)]] + D_{KL}[Q(o_\tau|\pi)\parallel P(o_\tau |C)]=G(\pi)$}

Literature

Karl J. Friston, Tommaso Salvatori et al. Active Inference and Intentional Behaviour.

Branching Time Active Inference: the theory and its generality

Stephen Francis Mann, Ross Pain, Michael D. Kirchhoff. Free energy: a user’s guide.