Three Minds (Theory Translator)

Kalman Filter

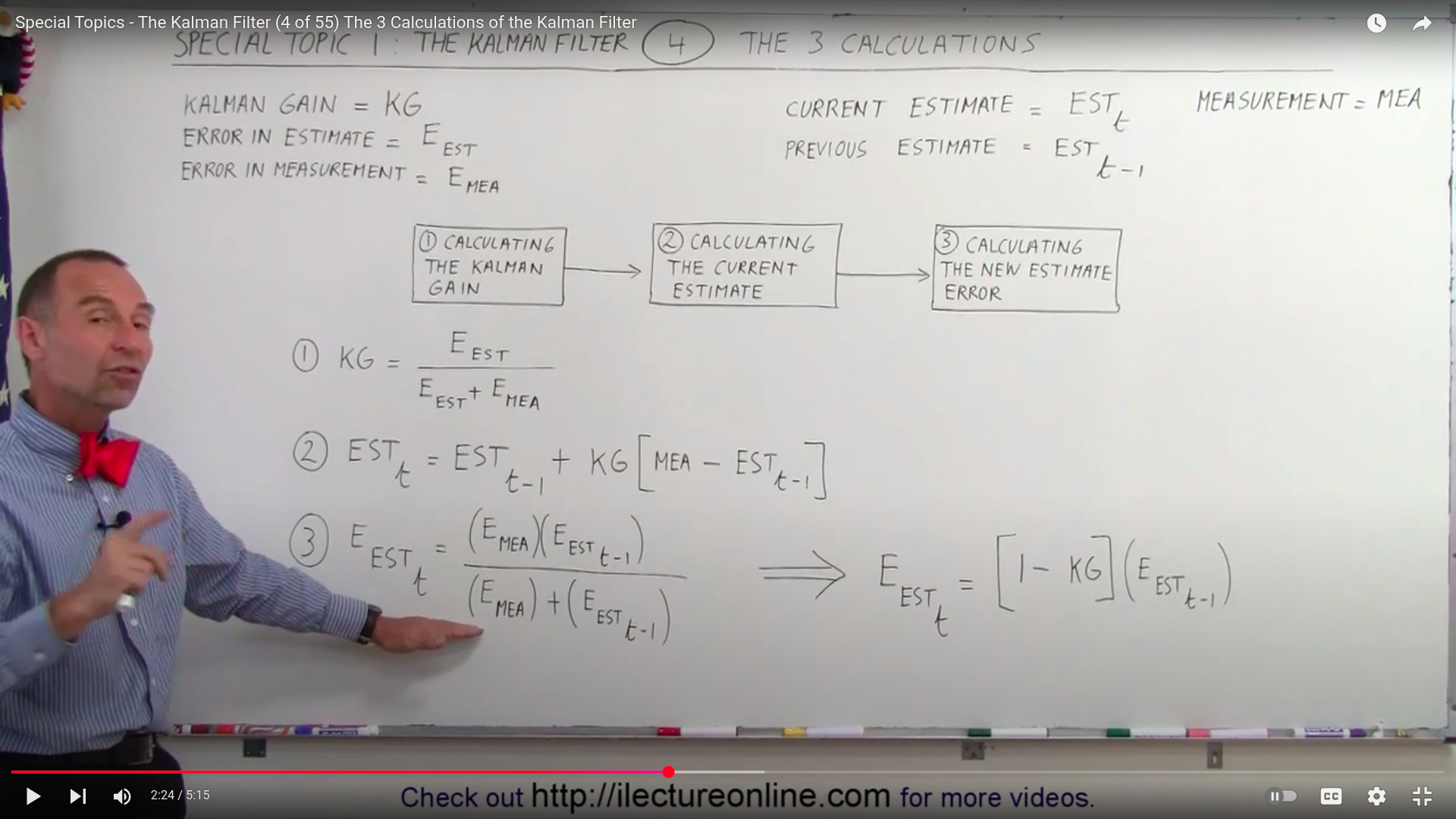

{$KG=\frac{Error_{estimate}}{Error_{estimate}+Error_{measurement}}$}

{$Estimate := Estimate + KG[Measurement - Estimate] = [1-KG]Estimate + KG[Measurement]$}

{$Error_{estimate} := \frac{Error_{measurement}Error_{estimate}}{Error_{estimate}+Error_{measurement}} = [1-KG]Error_{estimate}$}

Literature

Wikipedia: Free Energy Principle

Free energy minimisation and Bayesian inference

All Bayesian inference can be cast in terms of free energy minimisation[35][failed verification]. When free energy is minimised with respect to internal states, the Kullback–Leibler divergence between the variational and posterior density over hidden states is minimised. This corresponds to approximate Bayesian inference – when the form of the variational density is fixed – and exact Bayesian inference otherwise. Free energy minimisation therefore provides a generic description of Bayesian inference and filtering (e.g., Kalman filtering). It is also used in Bayesian model selection, where free energy can be usefully decomposed into complexity and accuracy:

{$ {\underset {\text{free-energy}}{\underbrace {F(s,\mu )} }}={\underset {\text{complexity}}{\underbrace {D_{\mathrm {KL} }[q(\psi \mid \mu )\parallel p(\psi \mid m)]} }}-{\underset {\mathrm {accuracy} }{\underbrace {E_{q}[\log p(s\mid \psi ,m)]} }}$}

Models with minimum free energy provide an accurate explanation of data, under complexity costs; cf. Occam's razor and more formal treatments of computational costs.[36] Here, complexity is the divergence between the variational density and prior beliefs about hidden states (i.e., the effective degrees of freedom used to explain the data).

AI says...

Kalman filters can be viewed as the steady-state solution of gradient descent on variational free energy within the framework of active inference. This means that the process of a Kalman filter minimizing estimation error by updating beliefs over time mirrors the free energy principle's core idea of minimizing surprise by minimizing variational free energy through a gradient-descent process. This establishes a deeper, formal link between a standard algorithm for optimal state estimation and an ambitious theory of brain function and behavior.